Infrastructure

This page describes the infrastructure of JH0project, where we host our websites (e.g. the one you are looking at) and services (e.g. DNS, databases).

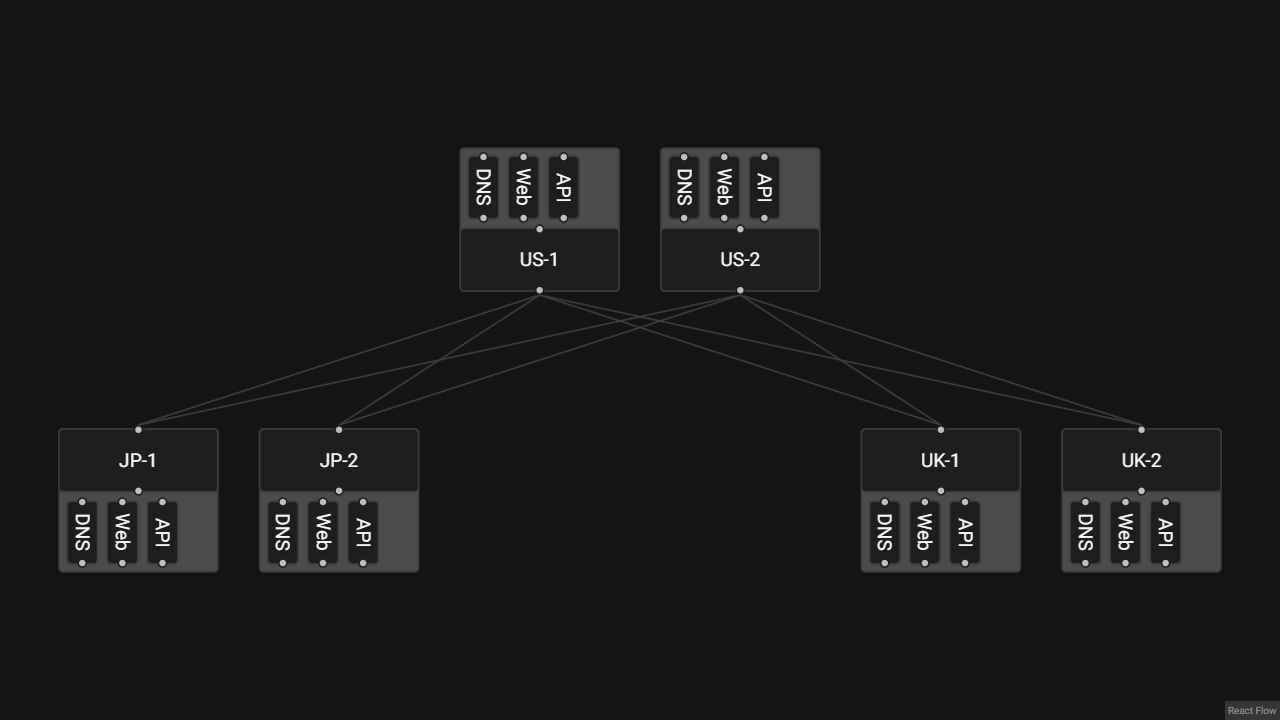

JH0project operates on a globally distributed infrastructure spanning multiple regions and continents, including Japan, US West, and the UK. This allows us to keep our infrastructure highly available, fault tolerant, and low latency. It comes at a cost: each region contains only 2 low-power servers (spec: 2 CPU cores, 12 GB RAM), so a custom architectural design is needed to achieve these goals while keeping management easy.

Architecture

The infrastructure is designed to be uniform, without any server dedicated to specific services. The same set of containerized services is deployed across all servers. This ensures that all services are designed to be stateless and horizontally scalable, and that the same configuration can be applied to every server, making the infrastructure easier to manage and maintain.

Software Stack

Databases

We're using CockroachDB, a distributed SQL database compatible with PostgreSQL, as our primary database. We chose CockroachDB over the other databases we researched because it can be distributed across multiple regions in an active-active setup (which eliminated PostgreSQL), it has Prisma ORM support (which eliminated YugabyteDB), and it is a CP database (which eliminated Cassandra).

We also host KeyDB for caching, mainly for Next.js. Every time we deploy a new version or restart the Next.js website, its cache is cleared, including the cache for the computationally expensive image optimization. We would also like to share the cache between all servers, so that each server doesn't need to render the same image again. To achieve this, we run KeyDB in a multi-master setup with one master per server, where all masters replicate to each other asynchronously. This lets us treat KeyDB as a single, asynchronously replicated cache: we read from and write to the KeyDB instance on the same server directly, without having to deal with replication or choose the right server to write to.
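The read path described above can be sketched as a cache-aside helper. This is a minimal illustration, not the Next.js cache handler itself: `KeyValueStore`, `get_or_render`, and `InMemoryStore` are hypothetical names, and the sketch only assumes a Redis-compatible client (KeyDB speaks the Redis protocol) connected to the instance on the same server.

```python
from typing import Callable, Dict, Optional, Protocol


class KeyValueStore(Protocol):
    """Subset of a Redis-compatible client (hypothetical interface)."""

    def get(self, name: str) -> Optional[bytes]: ...

    def set(self, name: str, value: bytes, ex: Optional[int] = None) -> object: ...


def get_or_render(
    store: KeyValueStore,
    key: str,
    render: Callable[[], bytes],
    ttl_seconds: int = 86400,
) -> bytes:
    """Return the cached value for key, rendering and caching it on a miss."""
    cached = store.get(key)
    if cached is not None:
        return cached
    value = render()
    # Written to the local master only. Replication to the other masters is
    # asynchronous, so another server may briefly miss and render it again.
    store.set(key, value, ex=ttl_seconds)
    return value


class InMemoryStore:
    """Dict-backed stand-in for the local KeyDB instance (ignores expiry)."""

    def __init__(self) -> None:
        self.data: Dict[str, bytes] = {}

    def get(self, name: str) -> Optional[bytes]:
        return self.data.get(name)

    def set(self, name: str, value: bytes, ex: Optional[int] = None) -> object:
        self.data[name] = value
        return True
```

Because every server talks only to its local master, the helper needs no knowledge of the other regions. The trade-off is that a freshly rendered image may be rendered once more elsewhere before replication catches up.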

DNS

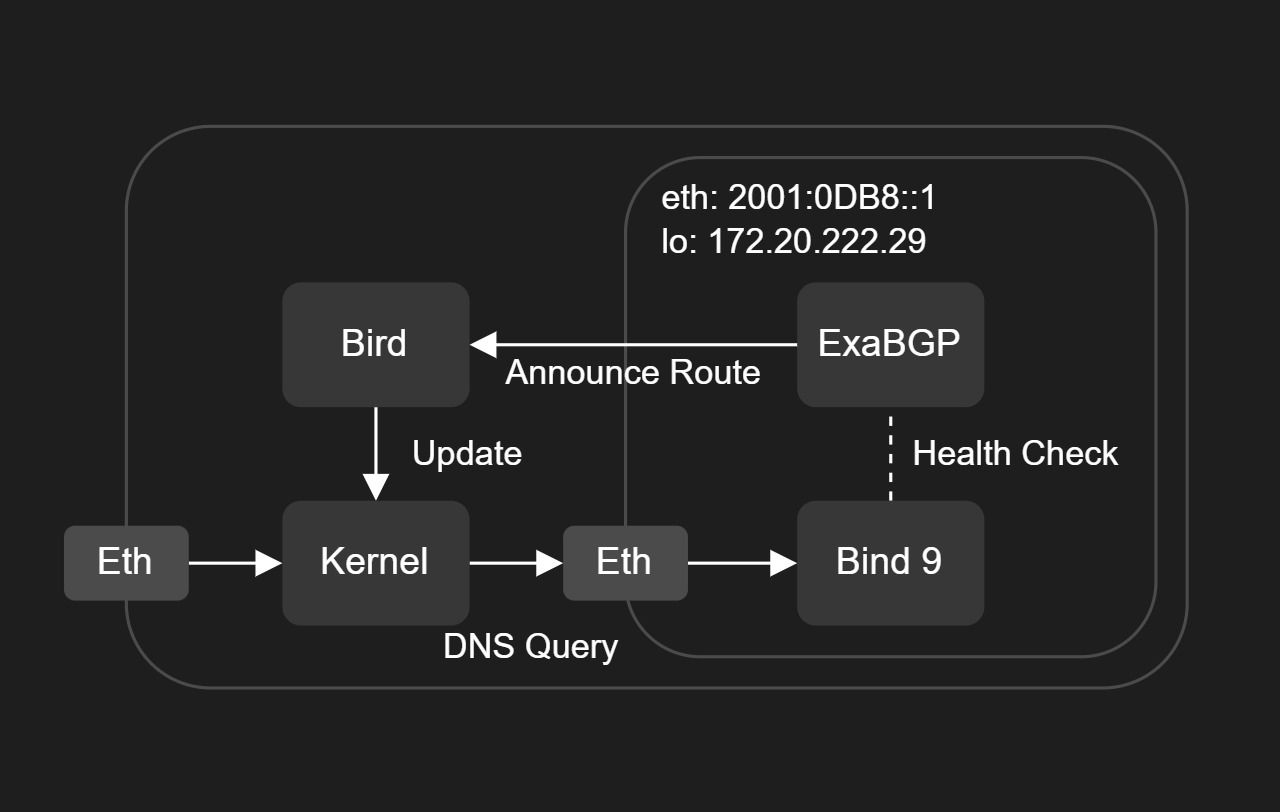

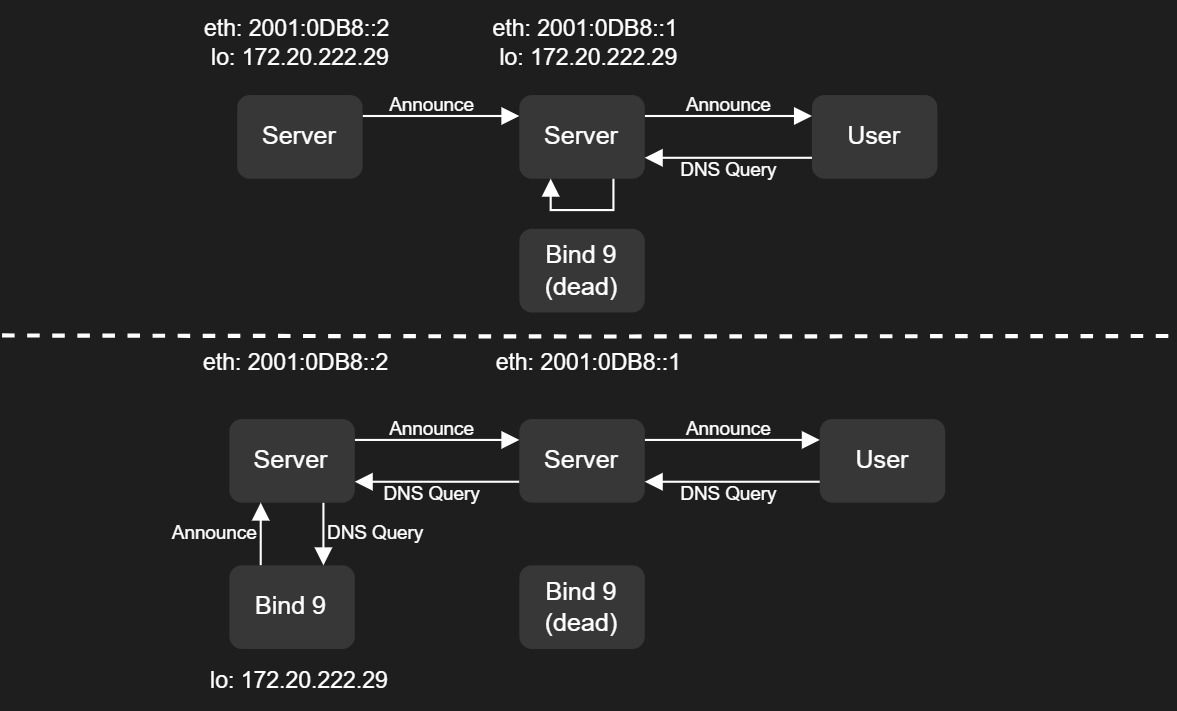

We host our own BIND 9 DNS servers, mainly for the DN42 network. To allow easy management and versioning of the BIND 9 config and the DNS records, we containerized the BIND 9 server and run it as a Docker container on each server. The DNS records are DNSSEC signed, and the DNS servers are configured to use DNS over TLS (DoT) for secure DNS queries. To make the service highly available, we use anycast to announce the DNS server IP address and rely on BGP to route traffic to the nearest server. When one of the servers goes down, traffic is routed to the next nearest server, giving us an active-active DNS setup. Since we're using ECMP, traffic is distributed evenly across servers without any special configuration.

In the container, ExaBGP monitors the health of the DNS server and announces the anycast address to BIRD, which in turn announces it to the BGP peers. The BGP peers are configured to use ECMP to distribute traffic evenly across all servers.
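As a rough sketch of such a health check, the script below sends a DNS query to the local server and prints commands in the style of ExaBGP's text API, which reads them from the stdout of a process it launches. The prefix `192.0.2.53/32`, the zone `example.dn42`, the 5-second interval, and all function names are assumptions for illustration, not our actual configuration.

```python
import socket
import struct
import sys
import time
from typing import Optional

ANYCAST_PREFIX = "192.0.2.53/32"  # hypothetical anycast address
QUERY_ID = 0x1234


def build_query(name: str, query_id: int = QUERY_ID) -> bytes:
    """Build a minimal DNS query for the SOA record of name."""
    # Header: id, flags, QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, 0x0000, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.strip(".").split(".")
    )
    # Root label, then QTYPE 6 (SOA) and QCLASS 1 (IN)
    return header + labels + b"\x00" + struct.pack("!HH", 6, 1)


def dns_is_healthy(server: str, name: str, timeout: float = 1.0) -> bool:
    """Return True if the server answers the query with RCODE 0 (NOERROR)."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
            sock.settimeout(timeout)
            sock.sendto(build_query(name), (server, 53))
            reply, _ = sock.recvfrom(512)
    except OSError:  # includes timeouts and refused connections
        return False
    if len(reply) < 12:
        return False
    reply_id, flags = struct.unpack("!HH", reply[:4])
    return reply_id == QUERY_ID and bool(flags & 0x8000) and (flags & 0x000F) == 0


def next_command(
    healthy: bool, announced: bool, prefix: str = ANYCAST_PREFIX
) -> Optional[str]:
    """Return the command needed to match the health state, if any."""
    if healthy and not announced:
        return f"announce route {prefix} next-hop self"
    if not healthy and announced:
        return f"withdraw route {prefix} next-hop self"
    return None


def run_forever(interval_seconds: float = 5.0) -> None:
    """Poll the local DNS server and emit announce/withdraw commands."""
    announced = False
    while True:
        healthy = dns_is_healthy("127.0.0.1", "example.dn42")
        command = next_command(healthy, announced)
        if command is not None:
            sys.stdout.write(command + "\n")
            sys.stdout.flush()  # the command must reach ExaBGP immediately
            announced = healthy
        time.sleep(interval_seconds)
```

A script launched by ExaBGP would call `run_forever()`. Keeping the decision in `next_command` means the route is only announced or withdrawn when the health state changes, rather than on every poll.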


Why do we announce it from inside the container? Because we can't simply set the anycast address on the server's loopback interface. If we did and the BIND 9 server went down, traffic would not be routed to the next nearest server: every DNS query reaching that server would still be more or less accepted, but no response would be sent back.


Infrastructure Management

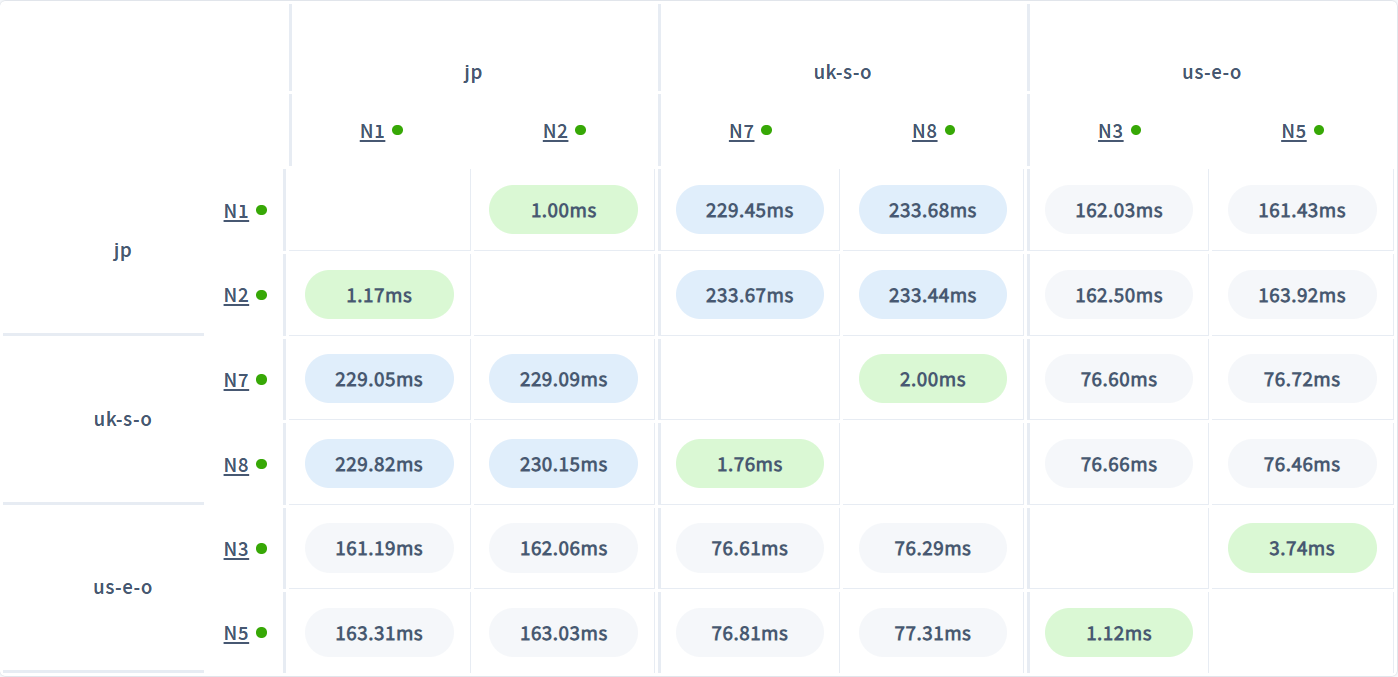

All services are containerized and run on plain Docker, managed by Coolify. The reason is that the latency between regions is too high for Kubernetes (the maximum regional latency is 266 ms); more specifically, etcd would not be able to elect a leader or stay in sync.

Regional latency shown in the CockroachDB Console


Besides, there are only two servers in each region that are powerful enough to run Kubernetes (the API server), so we can't form a cluster per region either. Not to mention that we only have a few services to manage, so the overhead of Kubernetes plus the supporting services needed to manage it, such as FluxCD/ArgoCD, CSI, and Sealed Secrets, is not worth it.
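One way to see the per-region limitation is through majority-quorum arithmetic. etcd uses Raft, which needs a majority of members to agree. The sketch below assumes a hypothetical per-region cluster with one etcd member on each server; the function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest majority of a Raft cluster with the given member count."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (2, 3, 5):
    print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
# 2 members: quorum 2, tolerates 0 failure(s)
# 3 members: quorum 2, tolerates 1 failure(s)
# 5 members: quorum 3, tolerates 2 failure(s)
```

With two members, losing either server loses the majority, so a two-server region gains no fault tolerance from clustering.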

Luckily, while we were looking for a solution, Coolify released multi-server support (in beta). Coolify is a really cool app, and you should check it out.